Test rewrite of the geometry assembler #320

Draft

Recently I've been playing around with re-implementing the geometry assembler, and I think it's at the point where we can figure out whether to go with it or not. It's not a clear decision because the results are... mixed. This PR is not meant to be merged, it's just for discussion.

TL;DR:

So this all started when I had an idea a while ago about using lookup tables (LUTs) to assemble geometry: from pulse to pulse you don't need to recompute the output location of each input pixel, because that depends only on the geometry, so it can be computed once and reused. I went through about 6 iterations of this idea before finding one that I think works well enough to be considered, which is `assembleDetectorData6()`. This is a micro-optimized version of `assembleDetectorData5()`, which is close enough that I would suggest reading the code for `assembleDetectorData5()` to understand `assembleDetectorData6()`. In turn, `assembleDetectorData5()` is a slightly optimized version of `assembleDetectorData4()`. The other attempts I think are not worth considering because they're either broken or too slow.

Here's an overview of how it works:

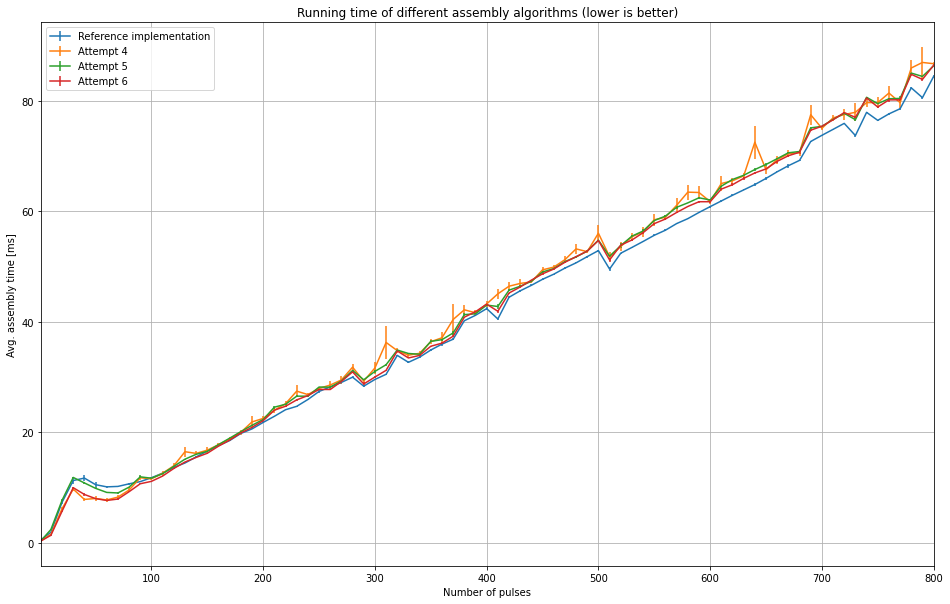
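To make the basic LUT idea concrete, here is a minimal pure-Python sketch (not the code in this PR). The names `build_lut()` and `assemble()`, the `(row, col)` position list, and the toy 3x3 geometry are all made up for illustration; the real implementation works on the arrays from `extra-geom`.

```python
def build_lut(positions, out_width):
    """Compute, once per geometry, the flat output index of every input pixel.

    `positions` is a hypothetical list of (row, col) output coordinates,
    one entry per input pixel.
    """
    return [row * out_width + col for row, col in positions]


def assemble(pulse, lut, out_size, fill=0.0):
    """Scatter one flattened pulse into a flattened output image via the LUT."""
    out = [fill] * out_size
    for src, dst in enumerate(lut):
        out[dst] = pulse[src]
    return out


# Toy geometry: 4 input pixels placed at the corners of a 3x3 output image.
positions = [(0, 0), (0, 2), (2, 0), (2, 2)]
lut = build_lut(positions, out_width=3)  # computed once

# The same LUT is reused for every pulse; no per-pulse geometry math.
pulses = [[1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0]]
images = [assemble(p, lut, out_size=9) for p in pulses]
```

The point is only that the per-pulse loop becomes a plain indexed copy, with all geometry work moved into the one-off `build_lut()` step.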

The LUT is generated by `generateAssemblyLUT2()` (this requires a specific input array from `extra-geom` to compute the LUT data). Let's define the number of pixels in a pulse as N; the LUT is then a 1D byte array of size `N * 3`. Each 3 bytes in the array are the first 3 bytes of a 4-byte int, and the idea is that since we can comfortably represent all possible LUT values (i.e. positions in the flattened output array) in 24 bits, we can cut the size of the LUT by 25% by using 24-bit ints. This is to reduce the impact of the LUT on the CPU caches: the larger the LUT is, the less space there is for the input and output arrays, and thus the more cache misses and the worse the performance. `uint32` is also slightly complicated, so there's some comments about that here.

Here are some graphs that I made from running this on Maxwell for pulse counts from 1 to 800 (the 'reference implementation' is the existing implementation):
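As an aside on the 24-bit LUT entries, the packing can be sketched like this in pure Python. This is an illustration only, not the PR's code: `pack_lut24()` and `unpack_index()` are hypothetical names, and it assumes little-endian byte order, so that "the first 3 bytes of a 4-byte int" are the low-order bytes.

```python
def pack_lut24(indices):
    """Pack output indices into a byte array using 3 bytes per entry.

    Assumes little-endian layout (an assumption of this sketch).
    """
    packed = bytearray()
    for idx in indices:
        if not 0 <= idx < (1 << 24):
            raise ValueError(f"index {idx} does not fit in 24 bits")
        packed += idx.to_bytes(3, "little")
    return packed


def unpack_index(packed, i):
    """Read entry i of the packed LUT back as a normal integer."""
    return int.from_bytes(packed[3 * i : 3 * i + 3], "little")


indices = [0, 2, 70_000, (1 << 24) - 1]
packed = pack_lut24(indices)  # 3 bytes per entry instead of 4
restored = [unpack_index(packed, i) for i in range(len(indices))]
```

With N entries this takes `N * 3` bytes instead of `N * 4`, which is where the 25% saving comes from; the cost is the extra work of widening each entry back to a full integer when it is read.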

Interestingly, this outperforms the reference until ~150 pulses, after which it degrades. I would put this down to a quirk of the architecture of the machine rather than anything related to the algorithm because I did not see this while testing on my own machine. It would be interesting to see if this is the case on the online cluster nodes.

This shows that after the initial improvement up to ~150 pulses, performance degrades and then stabilizes at around 97% of the performance of the existing implementation.

And in practice, this translates to a slowdown of about 2ms for 800 pulses. At 400 pulses it's around 1ms, so assuming the slowdown scales linearly, I guess this would translate to 4ms at 1600 pulses (though at that point assembly itself would take ~160ms). It's interesting to me that the performance of `assembleDetectorData4()` is so jittery; perhaps it's more sensitive to other workloads on the machine.

General thoughts:

Thoughts? (anyone other than the reviewers, feel free to comment too :) )